4 Test Administration

Chapter 4 of the Dynamic Learning Maps® (DLM®) Alternate Assessment System 2014–2015 Technical Manual—Integrated Model (Dynamic Learning Maps Consortium, 2016) describes general test administration and monitoring procedures. This chapter describes updated procedures and data collected in 2019–2020.

For a complete description of test administration for DLM assessments, including available resources and materials and procedures for monitoring assessment administration, see the 2014–2015 Technical Manual—Integrated Model (Dynamic Learning Maps Consortium, 2016).

4.1 Overview of Key Administration Features

This section describes DLM test administration for 2019–2020. For a complete description of key administration features, including information on assessment delivery, Kite® Student Portal, and linkage level selection, see Chapter 4 of the 2014–2015 Technical Manual—Integrated Model (Dynamic Learning Maps Consortium, 2016). Additional information about administration can also be found in the Test Administration Manual 2019–2020 (Dynamic Learning Maps Consortium, 2019c) and the Educator Portal User Guide (Dynamic Learning Maps Consortium, 2019b).

4.1.1 Change to Administration Model

Beginning with the 2019–2020 operational year, the former integrated model transitioned to the instructionally embedded model. As a result, administration shifted from a fall instructionally embedded window followed by spring system-delivered administration of a subset of testlets to two instructionally embedded assessment windows. The decision to transition to two instructionally embedded windows followed multiple rounds of input from state education agency members and the Technical Advisory Committee. The rationale for the transition was to increase precision in determining what students know and can do by covering the full blueprint in each window. Scoring did not change and remained based on all available evidence collected throughout the year. See Chapter 5 of this manual for details on how the assessments are scored.

During the two instructionally embedded windows, teachers had flexibility to choose the Essential Elements (EEs) assessed, within blueprint constraints (e.g., “Choose at least three Essential Elements, including at least one RL and one RI.”). Teachers also chose the linkage level at which each EE was assessed.
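The example blueprint constraint quoted above can be expressed as a simple check over a teacher's selected EEs. The following Python sketch is illustrative only: the `EssentialElement` type, its `strand` field, and the identifiers in the usage example are hypothetical, and actual DLM blueprints include further requirements by claim and conceptual area that are not modeled here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EssentialElement:
    ee_id: str   # hypothetical identifier, for illustration only
    strand: str  # hypothetical field, e.g., "RL" or "RI"

def meets_example_constraint(selected: list[EssentialElement]) -> bool:
    """Check the quoted example: at least three EEs, including at least one RL and one RI."""
    distinct = set(selected)                   # count each EE only once
    strands = {ee.strand for ee in distinct}
    return len(distinct) >= 3 and {"RL", "RI"} <= strands

plan = [
    EssentialElement("EE-1", "RL"),
    EssentialElement("EE-2", "RI"),
    EssentialElement("EE-3", "RL"),
]
print(meets_example_constraint(plan))  # prints True
```

Three RL EEs with no RI EE, or only two distinct EEs, would fail the same check.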

4.1.2 Test Windows

The fall instructionally embedded window opened on September 9, 2019, and closed on December 20, 2019. The spring instructionally embedded window opened on February 3, 2020, and closed on May 15, 2020.

The COVID-19 pandemic significantly disrupted the spring 2020 window, and all states ended assessment administration earlier than planned. State education agencies were given the option to extend assessment administration through the end of June; however, no state education agency used this option.

4.1.3 The Instruction and Assessment Planner

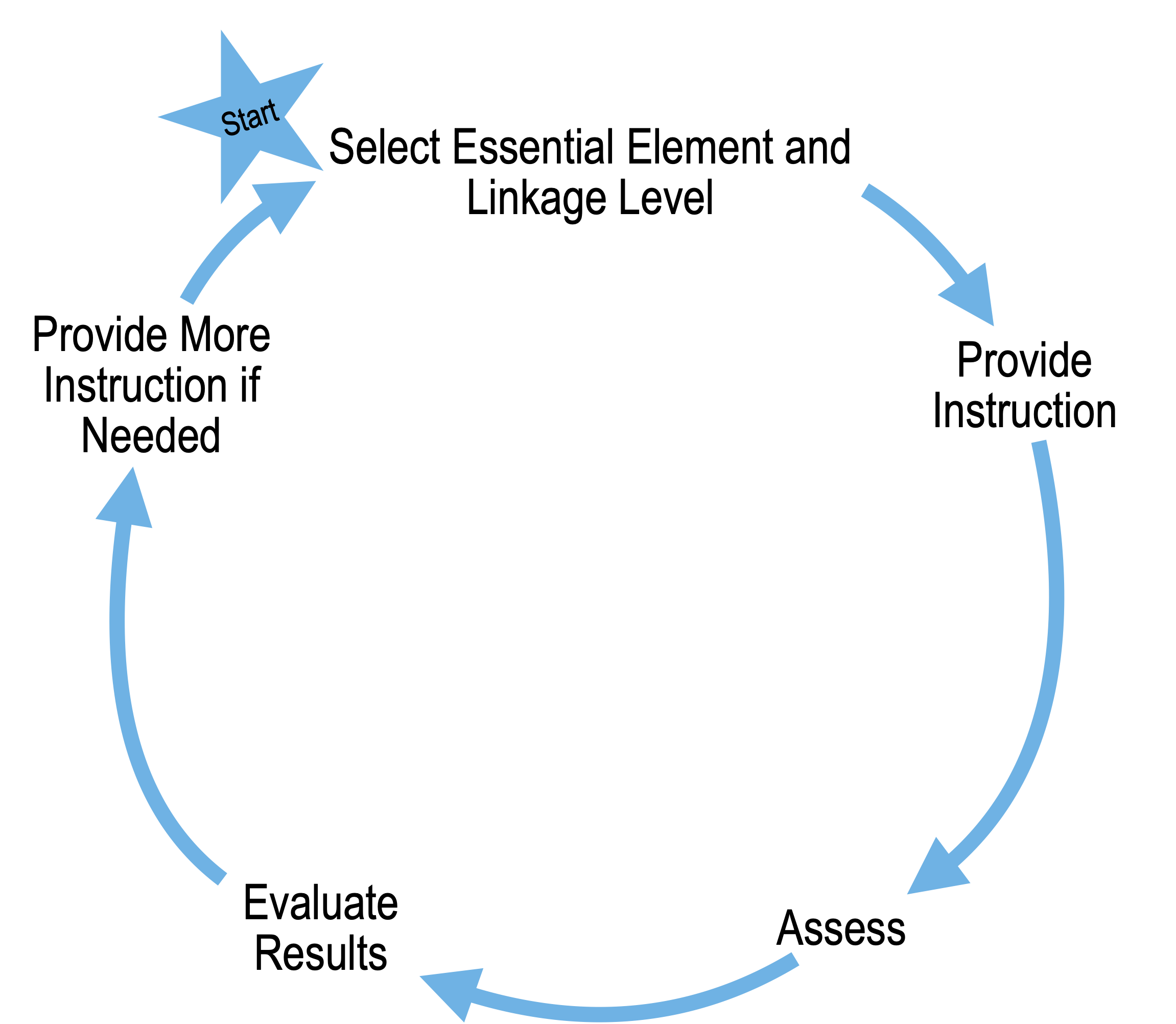

In 2019–2020, the Instruction and Assessment Planner (“Planner” hereafter) replaced the Instructional Tools Interface in Educator Portal within the Kite Suite®. The Planner is designed to facilitate a cycle of instruction and assessment throughout instructionally embedded administration. Students with the most significant cognitive disabilities are best able to demonstrate what they know and can do through a cyclical approach to instruction, assessment, and evaluation, rather than being assessed at the end of a semester or school year on a large body of instruction they must recall from prior weeks and months (Brookhart & Lazarus, 2017). The instructionally embedded model of the DLM Alternate Assessment encourages this cyclical approach by giving teachers the opportunity to choose one or more Essential Elements and a linkage level, develop and deliver instruction for the chosen Essential Elements, and then assess the student when the teacher determines the student is ready (Figure 4.1). Additional information on the cyclical approach for instructionally embedded assessment can be found in the Test Administration Manual 2019–2020 (Dynamic Learning Maps Consortium, 2019c).

Figure 4.1: Cyclical Approach for Instructionally Embedded Assessment

The goal of the redesign was to build a system that supports teaching, learning, and the process of administering assessments, and that helps teachers use results to guide next steps for instruction. To inform the development of the Planner, a teacher cadre was formed at the end of 2018 to gather teacher input and feedback on the interface for administering instructionally embedded assessments. The cadre met in a series of four sessions, each offered at two meeting times to accommodate teachers’ schedules.

During the first cadre session, teachers reviewed a set of wireframes and provided reactions and feedback. Feedback from each session was used to update the wireframes for the next session. Cadre feedback fell into two themes: visual and process. Visual feedback related to how best to display information, whereas process feedback related to integrating other aspects of the DLM assessment (e.g., the First Contact survey) into the interface to centralize resources for teachers.

The biggest challenge identified during the cadre sessions was how to let teachers see and do everything for a student on one page, rather than navigating several different websites to make decisions about EEs or linkage levels. In response to this feedback, we based both the visual and process aspects of the design on the assessment blueprints and blueprint requirements for each subject, which teachers were already familiar with. Cadre members also noted that labeling the linkage levels and ordering them from Initial Precursor to Successor would be helpful, because teachers had learned the terms during required training but did not necessarily have that information readily available while creating plans.

The cadre also discussed that creating an instructional plan for a student involves several steps. A teacher first creates the instructional plan by selecting the EE and linkage level and then, after instruction, updates the status to indicate that instruction is complete. The system then assigns a testlet that must be administered and completed. During the cadre discussions, teachers expressed the need for a clear order of steps so they knew what to do next. We discussed including a key for each status in the interface so teachers could see where they were in the process for each instructional plan.
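The sequence of plan statuses described above behaves like a small linear state machine. The sketch below uses hypothetical status names (`PLAN_CREATED`, `INSTRUCTION_COMPLETED`, `TESTLET_COMPLETED`) that loosely mirror the Planner's status indicators; it is an illustration of the workflow only and does not reflect how the Kite Suite® actually stores or updates plan status.

```python
from enum import Enum

class PlanStatus(Enum):
    """Hypothetical status names for an instructional plan."""
    PLAN_CREATED = 1           # EE and linkage level selected; no testlet assigned yet
    INSTRUCTION_COMPLETED = 2  # teacher marked instruction complete; testlet assigned
    TESTLET_COMPLETED = 3      # assigned testlet administered and completed

# Each status may advance only to the next step in the cycle.
NEXT_STATUS = {
    PlanStatus.PLAN_CREATED: PlanStatus.INSTRUCTION_COMPLETED,
    PlanStatus.INSTRUCTION_COMPLETED: PlanStatus.TESTLET_COMPLETED,
}

def advance(status: PlanStatus) -> PlanStatus:
    """Return the next status, or raise ValueError if the plan is already finished."""
    if status not in NEXT_STATUS:
        raise ValueError(f"{status.name} is the final status for this plan")
    return NEXT_STATUS[status]

status = PlanStatus.PLAN_CREATED
while status in NEXT_STATUS:
    status = advance(status)
print(status.name)  # prints TESTLET_COMPLETED
```

Restricting each status to a single successor is what gives teachers the clear order of steps the cadre asked for.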

Finally, the cadre expressed a desire for the interface to report testlet mastery after a testlet is completed, noting that this information would help them make subsequent instructional plans. Therefore, we introduced a mastery icon in each cell with a completed testlet that indicates whether the student mastered the linkage level for that EE.

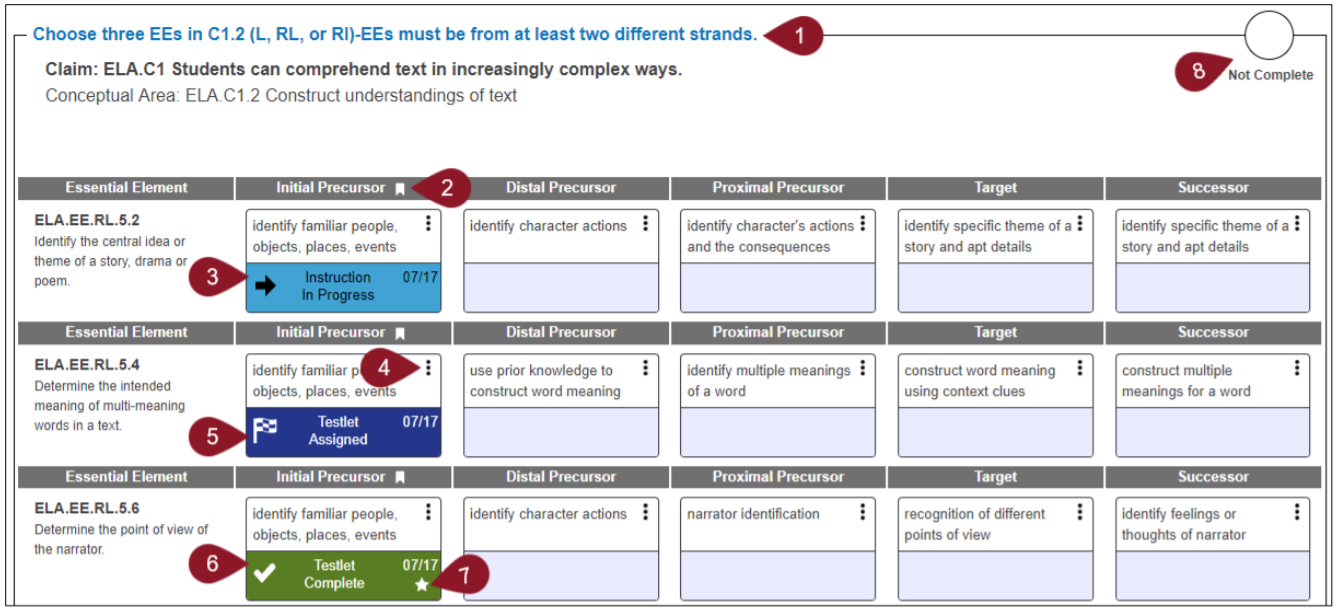

Figure 4.2 displays a screenshot of the final Planner design, with numbered indicators pointing to features discussed by the teacher cadre. For example, the Planner includes information about blueprint coverage for each claim or conceptual area (numbers 1 and 8), indicators for the status of the instructional plan (numbers 3, 5, and 6), and testlet mastery information (number 7).

Figure 4.2: Example of the Instruction and Assessment Planner

Note. Numbers indicate key features of the Planner. 1 = Blueprint requirements for the claim or conceptual area; 2 = Indicator for the recommended linkage level; 3 = Indicator that an instructional plan has been created, but the testlet has not been assigned; 4 = Additional information available for the linkage level; 5 = Indicator that instruction has been completed, but the testlet has not been completed; 6 = Indicator that the testlet has been completed; 7 = Indicator for performance on the linkage level where a star represents the student answered 80% of items correctly; 8 = Indicator for whether or not all blueprint requirements have been met for the claim or conceptual area.
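Indicator 7 can be read as a threshold rule on the proportion of items answered correctly. The sketch below assumes the star appears when a student answers at least 80% of a testlet's items correctly; the note above states "80%" without saying whether the boundary is inclusive, and the function name is hypothetical. Integer arithmetic is used so that boundary cases such as 4 of 5 items are not affected by floating-point rounding.

```python
def shows_mastery_star(items_correct: int, items_total: int) -> bool:
    """Hypothetical rule: star when at least 80% of a testlet's items are correct."""
    if items_total <= 0:
        raise ValueError("a testlet must contain at least one item")
    # Compare 100 * correct with 80 * total to avoid floating-point rounding.
    return items_correct * 100 >= 80 * items_total

print(shows_mastery_star(4, 5))  # prints True
print(shows_mastery_star(3, 5))  # prints False
```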

4.2 Administration Evidence

This section describes evidence collected during the 2019–2020 operational administration of the DLM alternate assessment. The categories of evidence include data relating to the system-recommended linkage levels for instructionally embedded assessments and administration incidents.

4.2.1 Instructionally Embedded Administration

As part of the transition to two instructionally embedded windows, system-assigned testlets using adaptive delivery are no longer a feature of the spring assessment administration. Rather, teachers manually select the linkage level to be assessed for each selected EE. To support teachers in their decision making, the system provides a recommended linkage level. In the fall instructionally embedded window, the recommended linkage level for a student is determined by the First Contact complexity band and is the same for all EEs in a subject. The correspondence between the First Contact complexity bands and the recommended linkage level is shown in Table 4.1.

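Table 4.1: Correspondence Between First Contact Complexity Bands and Recommended Linkage Levels

| First Contact Complexity Band | Recommended Linkage Level |
|---|---|
| Foundational | Initial Precursor |
| 1 | Distal Precursor |
| 2 | Proximal Precursor |
| 3 | Target |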

During the spring instructionally embedded window, the recommended linkage level is determined using rules similar to the adaptive routing algorithm that was previously used for system-assigned testlets.

- The spring recommended linkage level was one level higher than the linkage level assessed in the fall if the student responded correctly to at least 80% of items. If the fall linkage level was already the highest (i.e., Successor), the recommendation remained at that level.

- The spring recommended linkage level was one level lower than the linkage level assessed in the fall if the student responded correctly to fewer than 35% of items. If the fall linkage level was already the lowest (i.e., Initial Precursor), the recommendation remained at that level.

- The spring recommended linkage level was the same as the linkage level assessed in the fall if the student responded correctly to at least 35% but fewer than 80% of items.
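Taken together, Table 4.1 and the three spring rules define a simple recommendation procedure. The Python sketch below is one way to express it, assuming the fall recommendation depends only on the First Contact complexity band and the spring recommendation depends only on the linkage level assessed in the fall and the percentage of items answered correctly on that testlet. The names are hypothetical, and the sketch does not handle cases the text leaves unspecified, such as an EE that was not assessed in the fall.

```python
# Linkage levels ordered from lowest to highest, as named in the text.
LINKAGE_LEVELS = [
    "Initial Precursor",
    "Distal Precursor",
    "Proximal Precursor",
    "Target",
    "Successor",
]

# Table 4.1: First Contact complexity band -> fall recommended linkage level.
FALL_RECOMMENDATION = {
    "Foundational": "Initial Precursor",
    "1": "Distal Precursor",
    "2": "Proximal Precursor",
    "3": "Target",
}

def spring_recommendation(fall_level: str, items_correct: int, items_total: int) -> str:
    """Recommend a spring linkage level from fall performance on one EE.

    Integer comparisons avoid floating-point error at the 35% and 80% boundaries.
    """
    if items_total <= 0:
        raise ValueError("items_total must be positive")
    index = LINKAGE_LEVELS.index(fall_level)
    if items_correct * 100 >= 80 * items_total:    # at least 80%: move up one level
        index = min(index + 1, len(LINKAGE_LEVELS) - 1)
    elif items_correct * 100 < 35 * items_total:   # fewer than 35%: move down one level
        index = max(index - 1, 0)
    # Otherwise (at least 35% but fewer than 80%): keep the fall level.
    return LINKAGE_LEVELS[index]

print(FALL_RECOMMENDATION["2"])                          # prints Proximal Precursor
print(spring_recommendation("Target", 4, 5))             # prints Successor
print(spring_recommendation("Distal Precursor", 7, 20))  # prints Distal Precursor
```

In the last example, 7 of 20 items (exactly 35%) keeps the recommendation at the fall level, while 4 of 5 items (80%) at Target moves it up to Successor. Clamping the index at both ends implements the rule that recommendations never move past Successor or below Initial Precursor.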

Consistent with fall instructionally embedded administration, teachers select a linkage level for each EE during the spring instructionally embedded administration.

4.2.2 Administration Incidents

As in all previous years, testlet assignment during the 2019–2020 operational assessment administration was monitored to ensure students were correctly assigned to testlets. Administration incidents that have the potential to affect scoring are reported to state education agencies in a supplemental Incident File. No incidents were observed during the 2019–2020 operational assessment windows. Assignment of testlets will continue to be monitored in subsequent years to track any potential incidents and report them to state education agencies.

4.3 Implementation Evidence

This section describes additional resources that were made available during the 2019–2020 operational implementation of the DLM alternate assessment. For evidence relating to user experience and accessibility, see the 2018–2019 Technical Manual Update—Integrated Model (Dynamic Learning Maps Consortium, 2019a).

4.3.1 Data Forensics Monitoring

Beginning with the 2019–2020 administration, two data forensics monitoring reports were made available in Educator Portal. The first report includes information about testlets completed outside of normal business hours. The second report includes information about testlets that were completed within a short period of time.

The Testing Outside of Hours report allows state education agencies to specify the days, and the hours within each day, during which testlets are expected to be completed (for example, Monday through Friday from 6:00 a.m. to 5:00 p.m.). Each state selects its own days and hours. The report then identifies students who completed assessments outside of the expected hours. The report includes the student’s first and last name, district, school, name of the completed testlet, and the times the testlet was started and completed. Information in the report is updated at approximately noon and midnight each day, and state education agencies can view the report in Educator Portal or download it as a CSV file.
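The core filter behind a report of this kind can be sketched as a check of each completion timestamp against a state's configured days and hours. This Python sketch hard-codes the example configuration from the text; the constant and function names and the record layout are hypothetical, treating both endpoints of the window as within expected hours is an assumption, and timestamps are assumed to already be in the state's local time zone.

```python
from datetime import datetime, time

# Hypothetical configuration using the example in the text:
# Monday through Friday (datetime.weekday() values 0-4), 6:00 a.m. to 5:00 p.m.
EXPECTED_DAYS = {0, 1, 2, 3, 4}
EXPECTED_START = time(6, 0)
EXPECTED_END = time(17, 0)

def completed_outside_hours(completed_at: datetime) -> bool:
    """Return True if a completion timestamp falls outside the expected days or hours."""
    if completed_at.weekday() not in EXPECTED_DAYS:
        return True
    # Both endpoints are treated as inside the expected window (an assumption).
    return not (EXPECTED_START <= completed_at.time() <= EXPECTED_END)

def outside_hours_report(records):
    """Keep only records whose 'completed' timestamp is outside the expected hours."""
    return [r for r in records if completed_outside_hours(r["completed"])]

records = [
    {"testlet": "Testlet A", "completed": datetime(2019, 10, 7, 10, 0)},   # Monday morning
    {"testlet": "Testlet B", "completed": datetime(2019, 10, 7, 18, 30)},  # Monday evening
    {"testlet": "Testlet C", "completed": datetime(2019, 10, 12, 10, 0)},  # Saturday
]
print([r["testlet"] for r in outside_hours_report(records)])  # prints ['Testlet B', 'Testlet C']
```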

The Testing Completed in a Short Period of Time report identifies students who completed a testlet within an unexpectedly short period of time. A testlet was included in the report if it was completed in less than 30 seconds in mathematics or less than 60 seconds in English language arts. The report includes the student’s first name, last name, grade, and state student identifier. Also included are the district, school, teacher, name of the completed testlet, number of items on the testlet, an indicator for whether or not all items were answered correctly, the number of seconds for completion of the testlet, and the starting and completion times. Information in the report is updated at approximately noon and midnight each day, and state assessment administrators can view the report in Educator Portal or download it as a CSV file.
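A minimal sketch of the inclusion rule for this report follows, using the stated thresholds of 30 seconds for mathematics and 60 seconds for English language arts. The subject keys and function name are hypothetical, and measuring completion time as the difference between the start and completion timestamps is an assumption about how the report computes it.

```python
from datetime import datetime, timedelta

# Inclusion thresholds stated in the text, in seconds (subject keys are hypothetical).
SHORT_TIME_THRESHOLDS = {"mathematics": 30, "ELA": 60}

def completed_in_short_time(subject: str, started: datetime, completed: datetime) -> bool:
    """Return True if the testlet was completed faster than the subject's threshold."""
    elapsed_seconds = (completed - started).total_seconds()
    return elapsed_seconds < SHORT_TIME_THRESHOLDS[subject]

# Usage: a mathematics testlet finished in 25 seconds would be flagged.
start = datetime(2020, 2, 10, 9, 0, 0)
print(completed_in_short_time("mathematics", start, start + timedelta(seconds=25)))  # prints True
```

Because the comparison is strict, a testlet completed in exactly 30 seconds (mathematics) or 60 seconds (English language arts) would not be flagged under this reading of "less than."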

4.3.2 Released Testlets

The DLM Alternate Assessment System provides educators and students with the opportunity to preview assessments by using released testlets. A released testlet is a publicly available sample DLM assessment. Released testlets cover the same content and are in the same format as operational DLM testlets. Students and educators can use released testlets as examples or opportunities for practice. Released testlets are developed using the same standards and methods used to develop testlets for the DLM operational assessments. New released testlets are added on a yearly basis.

In response to state inquiries about supplemental assessment resources to address the increase in remote or disrupted instruction due to COVID-19, the DLM team published additional English language arts, mathematics, and science released testlets during the spring 2020 window. Across all subjects, nearly 50 new released testlets were selected and made available through Kite Student Portal. To help parents and educators better review the available options for released testlets, the DLM team also provided tables for each subject that display the Essential Elements and linkage levels for which released testlets are available.

The test development team selected new released testlets that would have the greatest impact for remote or disrupted instruction. The team prioritized testlets at the Initial Precursor, Distal Precursor, and Proximal Precursor linkage levels, because those linkage levels are used by the greatest number of students. The team selected testlets written to Essential Elements that covered common instructional ground and considered previously released testlets to minimize overlap between the testlets already available and the new released testlets. The team also aimed to provide at least one new released testlet per grade level, where possible.

4.4 Conclusion

During the 2019–2020 academic year, the DLM system transitioned to two instructionally embedded windows, with the expectation that teachers cover the full blueprint in each window. The Instruction and Assessment Planner was introduced to better support learning, instruction, and the process of administering DLM testlets. Additionally, new data forensics monitoring reports were made available to state education agencies in Educator Portal. Finally, the DLM team published additional English language arts, mathematics, and science released testlets during the spring 2020 window to support remote or disrupted instruction resulting from COVID-19. Updated results for administration time, linkage level selection, user experience with the DLM system, and accessibility supports are not provided in this chapter because the limited samples from the spring 2020 window may not be representative of the full DLM student population. For a summary of these administration features in 2018–2019, see Chapter 4 of the 2018–2019 Technical Manual Update—Integrated Model (Dynamic Learning Maps Consortium, 2019a).